Large Model Algorithm Engineer


I was a master's student in Computer Science and Technology at Tongji University, supervised by Gang Wei. Before that, I was an undergraduate in Computer Science and Technology at Hohai University, where I served as captain of the Hohai University ACM Team, coached by Xuejie Zhang and Yun Zhu. My research interests focus on multimodal large models, 3D computer vision, and reinforcement learning. If you think we share research interests, feel free to email me.


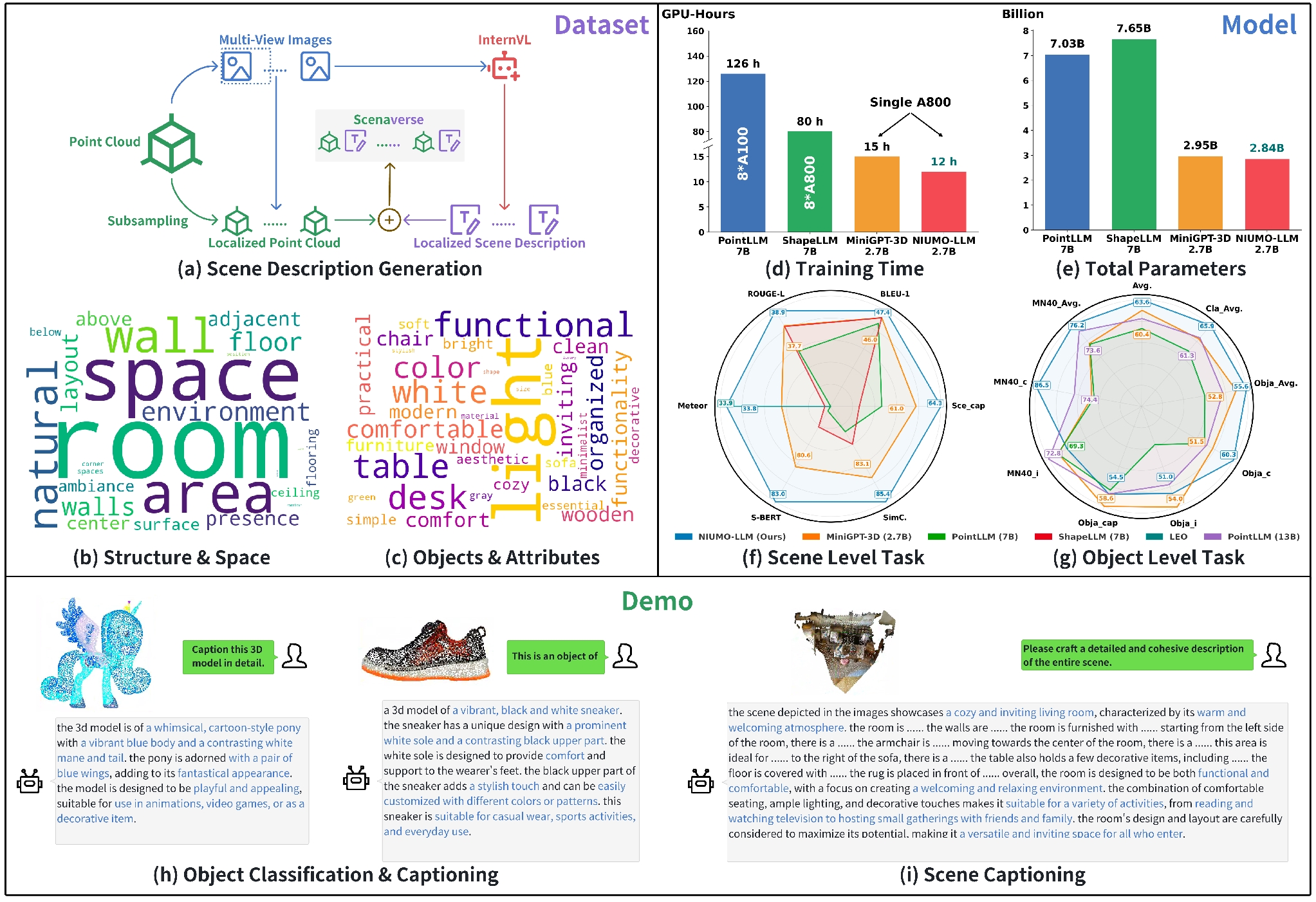

Xiaoyun Hu*, Xiaohan Yan*, Nan Wang, Xiaowei Song, Gang Wei, Zhicheng Wang

WACV 2026

We propose HOLO, which includes a large-scale scene description dataset and a lightweight 3D-LLM.


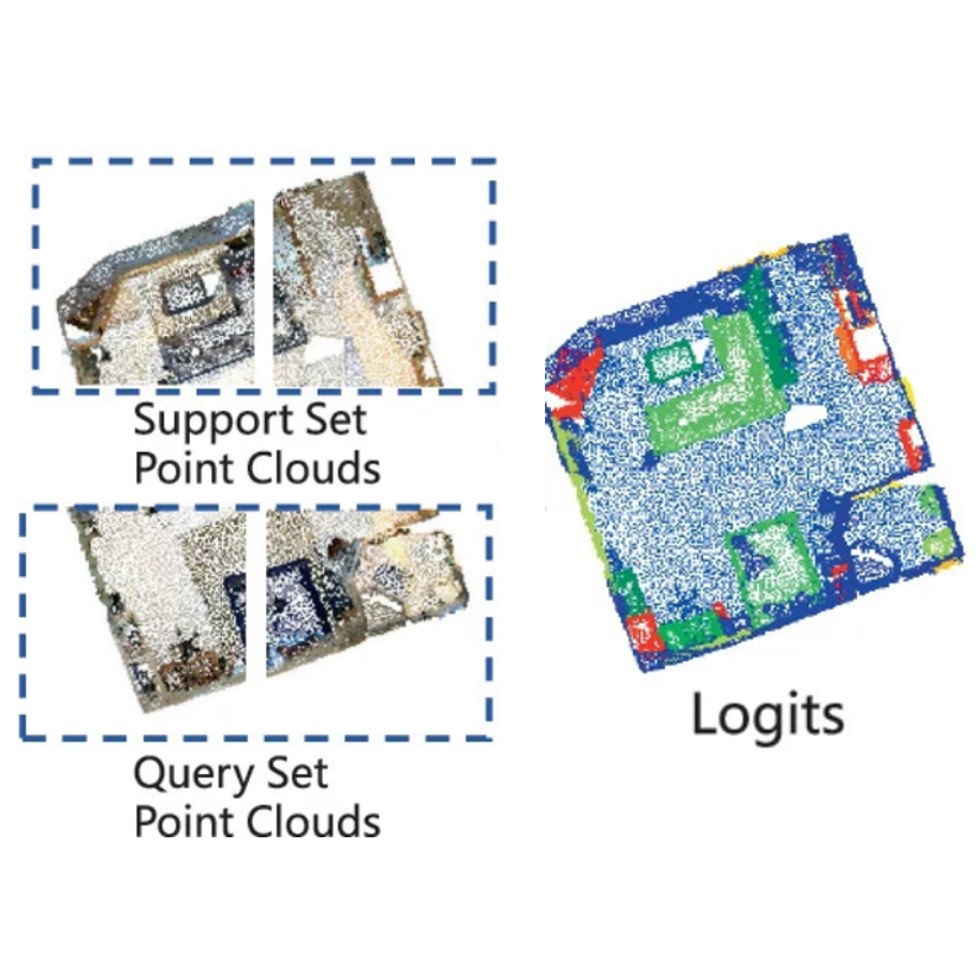

Xiaohan Yan*, Zijian Jiang*, Yinghao Shuai*, Nan Wang, Xiaowei Song, Wenbo Ji, Ge Wu, Jinyu He, Gang Wei, Zhicheng Wang

Given 3D point clouds and posed multi-view RGB-D images, RE0 leverages 3D geometric information, 2D-3D projection relationships, and CLIP semantic features for zero-shot 3D instance segmentation.


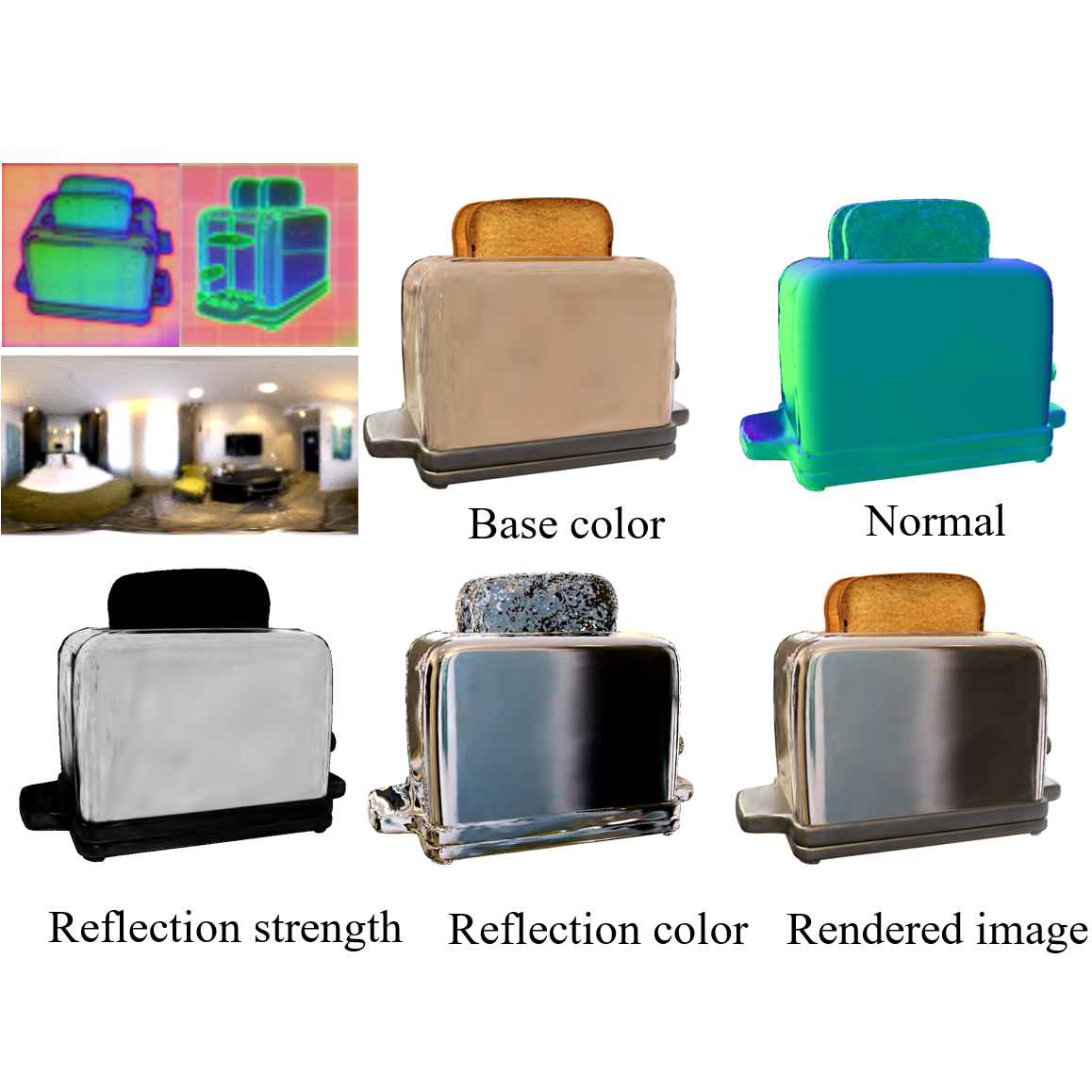

Nan Wang, Xiaohan Yan, Xiaowei Song, Zhicheng Wang

We use semantic features derived from a 2D foundation model to improve material property optimization for 3D Gaussian Splatting (3DGS).


Xiaohan Yan, Nan Wang, Xiaowei Song, Gang Wei, Zhicheng Wang

We combine local and global structural features to improve the performance of few-shot point cloud semantic segmentation.


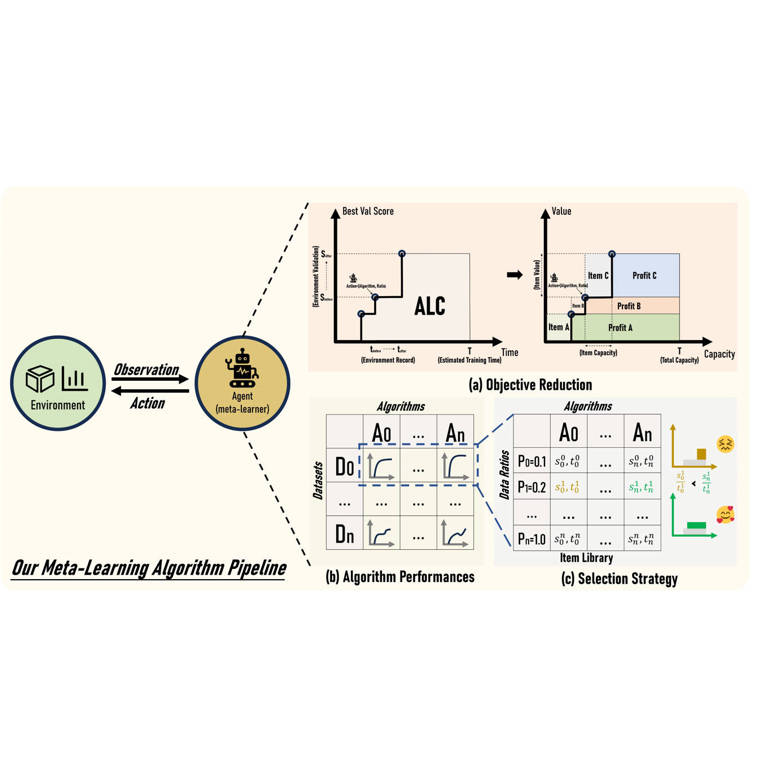

Jinyu He, Xiaowei Song, Xiaohan Yan, Nan Wang, Yuqi Miao, Zijian Jiang, Fei Chao, Yan Zhang, Shengchuan Zhang, Rongrong Ji

Meta-learning from learning curves is a key sub-problem of meta-learning: an area that is not yet mature but is gradually gaining attention.


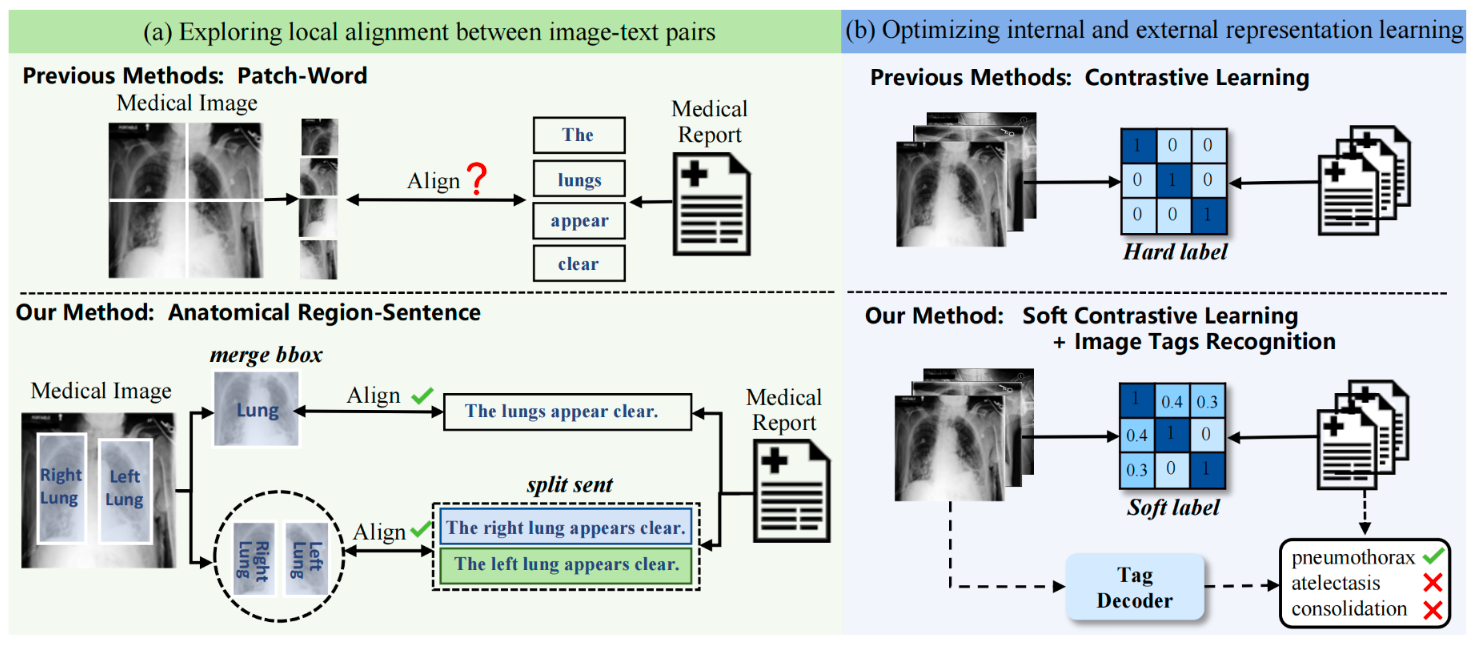

Qingqiu Li, Xiaohan Yan, Jilan Xu, Runtian Yuan, Yuejie Zhang, Rui Feng, Quanli Shen, Xiaobo Zhang, Shujun Wang

We propose an anatomical structure-guided framework for medical vision-language pre-training that improves cross-modal alignment.


Xiaohan Yan, Nan Wang, Xiaowei Song, Jinyu He

This competition involved fine-tuning large language models on private datasets. Our team scored 0.905, ranking in the top 3% worldwide and earning a silver medal on the leaderboard.